If you’re looking to move from a proof of concept to a production-ready generative AI system, you need more than just larger models. You need infrastructure built to scale. Running large models like Llama 3.1 demands massive memory, ultra-fast data access and high-throughput compute to ensure low-latency performance under pressure. If you still use standard GPU setups, it may fall short due to limited memory, low bandwidth and power inefficiencies.

Without a purpose-built architecture, you risk low inference speeds, escalating operational costs and systems that can’t keep pace with real-world demands. However, opting for hardware alone is not enough; what matters more is how that power is delivered and optimised for your scaling workloads. That’s exactly what we discuss in our latest article below.

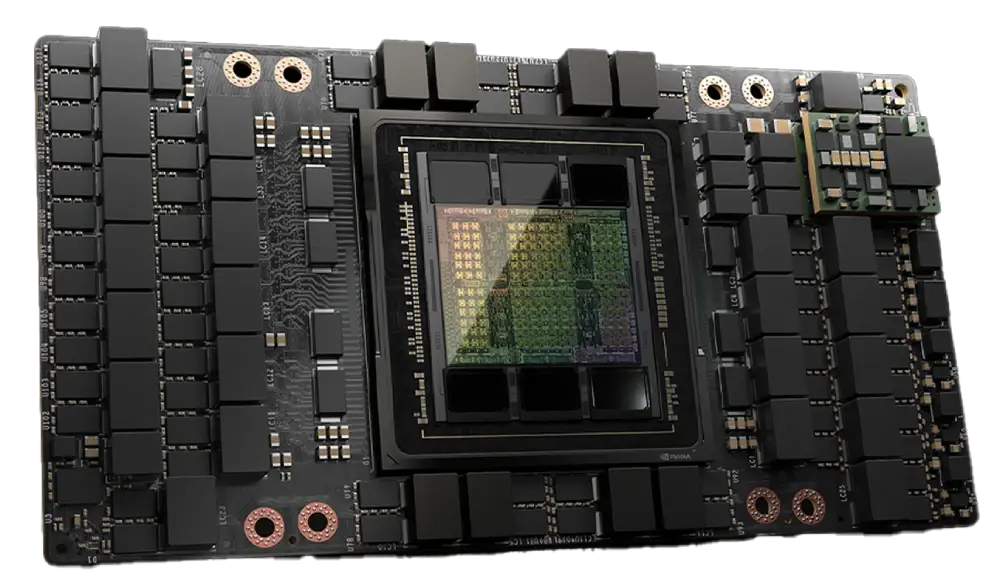

NVIDIA HGX H200 for Scalable Generative AI Workloads

If you are scaling your generative AI system, you already know how complex it can become. The way these systems grow, traditional infrastructure quickly becomes a challenge because:.

- Memory struggles to keep up with larger models.

- Bandwidth can't handle the growing data demands.

- Power inefficiency drives up operational costs.

- Latency affects real-time performance.

- Scalability limits growth potential.

- Resource inefficiencies slow down deployment.

That’s why enterprises are using the NVIDIA HGX H200 to build Generative AI systems at scale. The NVIDIA HGX H200 delivers faster performance with:

-

141GB HBM3e Memory: This ultra-fast memory allows AI systems to handle large model weights and long context windows without slowing down due to memory swaps, which is crucial for working with large language models like Llama 3.1 and GPT-3 at scale, especially when processing long prompts or handling high batch sizes.

-

4.8TB/s Memory Bandwidth: The high memory bandwidth eliminates bottlenecks in data access during both training and inference. This ensures faster iteration and quicker model deployment, essential for generative AI use cases like RAG, fine-tuning and vision-language models that demand rapid data retrieval.

-

4 PetaFLOPS FP8 Precision: With this level of performance, AI systems can efficiently run transformer-based models with lower-precision computations without losing accuracy. This boosts the scalability of generative AI for faster processing times and handling larger models without sacrificing output quality.

-

2X Faster LLM Inference: By delivering twice the inference speed of the NVIDIA H100, this supports generative AI systems in scaling to serve more users with lower latency. It allows enterprises to manage more concurrent requests while reducing operational costs, making generative AI services more efficient at scale.

NVIDIA HGX H200 Optimised for Enterprise Scale

Yes, the NVIDIA HGX H200 delivers exceptional performance for building Generative AI at scale. However, the AI Supercloud makes it even more exceptional with optimised NVIDIA HGX H200 GPU Clusters for AI, built to help businesses transition from pilot to production without friction.

Here’s how we do it:

Customised to Your Workload

We understand that every AI application is unique. Whether you’re deploying a retrieval-augmented generation (RAG) pipeline, training large foundation models or executing multi-modal inference at the edge, we customise:

-

Flexible Configurations: Tailor your deployment with the right mix of GPUs, CPUs, RAM, storage and middleware.

-

Personalised Performance Tuning: Get matched with the optimal setup based on workload type, be it training, inference, simulation, or fine-tuning.

-

Dedicated Technical Support: Our MLOps engineers are on hand to manage performance, scaling and continuous optimisation.

You get a solution optimised for the exact performance your application requires.

High-Speed Networking & Storage

Our NVIDIA HGX H200 is built for speed with:

-

NVIDIA Quantum-2 InfiniBand with 400Gb/s networking

-

NVIDIA NVLink with 900GB/s inter-GPU bandwidth

-

NVIDIA-certified WEKA storage with GPUDirect Storage support for ultra-low latency I/O

These technologies enable high-throughput data streaming, cutting down training times and accelerating insights.

Scale to Thousands of GPUs

Large Generative AI systems require massive computational power to train and fine-tune large models. With the ability to scale GPU resources efficiently, our infrastructure allows enterprises to rapidly deploy thousands of NVIDIA HGX H200 GPUs within as little as 8 weeks.

Why?

-

Rapid Scaling: Scaling GPU resources quickly ensures your system can handle the increasing demands of large model training and inference.

-

Accelerated Time-to-Market: The ability to deploy thousands of GPUs in a short timeframe will allow your businesses to bring AI products to market faster, staying ahead of competitors.

-

High-Performance Consistency: The NVIDIA HGX H200 is designed to handle the most complex Generative AI workloads, ensuring that performance remains optimal as your model and data requirements scale up.

End-to-End Services

With our end-to-end services, we ensure your enterprise's Generative AI workloads perform optimally:

- Fully Managed Kubernetes: Effortlessly automate and scale your AI workflows with enterprise-grade Kubernetes orchestration, optimised for the high-performance capabilities of the NVIDIA HGX H200.

- MLOps-as-a-Service: From model training to deployment, we provide end-to-end support to streamline the development of generative AI systems with NVIDIA HGX H200.

Liquid Cooling for Optimal Performance

Our NVIDIA HGX H200 systems are equipped with liquid cooling, ensuring optimal thermal management for large generative AI workloads. This maintains peak performance, reduces thermal throttling and supports the high demands of scaling AI systems.

With the AI Supercloud, you're not just accessing the power of NVIDIA HGX H200, you're building with a platform that is ready to scale, secure by design and built to deliver results from day one.

Book a Discovery Call and see how we can help you deploy generative AI at enterprise scale faster and smarter.

Explore Related Resources

FAQs

What is the NVIDIA HGX H200?

The NVIDIA HGX H200 is a high-performance GPU, built on Hopper architecture. The GPU is designed for large-scale AI workloads.

What is the memory bandwidth of the NVIDIA HGX H200?

The NVIDIA HGX H200 features 4.8TB/s of memory bandwidth for high-throughput data access for faster AI model training and inference, reducing bottlenecks and speeding up generative AI workloads.

How much memory does the NVIDIA HGX H200 have?

The NVIDIA HGX H200 comes equipped with 141GB of HBM3e memory, providing ample capacity to handle large models and high batch sizes for generative AI, ensuring faster training and lower latency.

How can I reserve the NVIDIA HGX H200?

Book a discovery call with our experts to reserve your NVIDIA HGX H200.